Introduction

I once received a long writing sample from a technical communication applicant who had written about a complex medical device. The sample demonstrated all the marks of quality: clean and consistent design, intuitive organization, and user-friendly concepts and procedures distilled for a non-technical audience. It seemed like a pretty standard case of evaluating writing samples.

After some digging, however, I discovered that the applicant had played a very limited role in producing the sample. Not only was the template completely outside his control, but so was the organization of the content. In fact, he had written only a few subsections, which someone else on his team had heavily edited.

Later, at a different job, I worked with a recruiter who actively sourced candidates for openings on my team and sent me applications to review. Most were solid, but occasionally I saw writing samples of highly questionable quality. In those cases, I knew we could probably have saved time by applying our quality standard earlier in the sourcing process to weed out weaker applicants.

I use these anecdotes to underscore two of the biggest challenges in evaluating writing samples during the hiring process. First, it is all too easy for applicants, whether consciously or not, to create a misleading impression of their writing and design abilities. Second, what counts as good writing and design is fairly subjective. You and your team may disagree about the quality of an applicant’s samples. Or you may be working with someone who does not specialize in technical communication and therefore relies on intuition and experience rather than explicit criteria.

You can address these challenges in several ways, but in my experience two methods work extremely well: 1) establishing a list of explicit evaluation criteria for your team; and 2) creating a list of structured follow-up interview questions. On my current team, these tactics played an important part in helping us identify top talent and ultimately hire five new technical writers.

Strategy 1: Evaluation Criteria for Writing Samples

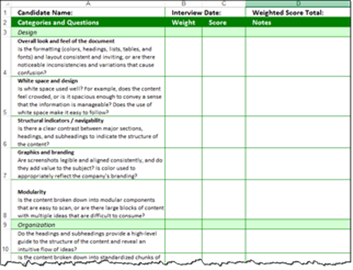

My team and I first established an agreed-upon list of evaluation criteria to guide our writing sample reviews. We based the list on common conventions in the industry and on hard-won experience we gained in wading through hundreds of documents. One of the ancillary benefits of the list came from sharing it with recruiters and other employees in our organization who were involved in the interview process but who were not experts in technical communication. We could all rely upon a shared vocabulary to describe what we liked or didn’t like about an applicant’s work. We could also use the list to help with our goal of treating all applicants as equitably as possible.

Design

- Is the overall look and feel of the document—colors, headings, lists, tables, and fonts—consistent and inviting, or does it contain noticeable inconsistencies and variations that cause confusion?

- Is white space used well? For example, does the content feel crowded, or is it spacious enough to convey that the information is manageable? Does the use of white space make the content easy to follow?

- Is there a clear contrast between major sections, headings, and subheadings to indicate the structure of the content?

- Are screenshots legible and consistently aligned, and do they add value to the content? Is color used appropriately to reflect the company’s branding?

- Is the content broken down into modular components that are easy to scan, or are there large blocks of content with multiple ideas that are difficult to consume?

Organization

- Do the headings and subheadings provide a high-level guide to the structure of the content and reveal an intuitive flow of ideas?

- Is the content broken down into standardized chunks of information that are easy to consume and would be easy to update later when necessary?

- Are there cross-references to related topics or next steps?

- Is the table of contents (if there is one) user friendly, showing intuitive groupings of topics and a logical progression of information?

Style

- Is the writer’s style clear, user-friendly, and easy to understand, or is it cluttered with jargon and acronyms?

- Are the sentences relatively short, direct, and active, with the main point usually coming sooner rather than later, or are they long and filled with too many details?

- Are unfamiliar labels, terms, and concepts introduced when appropriate and then used consistently later on in the document?

Technical Complexity

- Does the content tackle a complex technical product, process, or topic and explain it in simple terms a non-expert can understand? Or does it explain a subject that is already relatively simple to grasp?

Diversity of Samples

- Do the samples demonstrate an ability to tackle different subjects, formats, and genres? For example, do they cover subjects as varied as how to use a software tool effectively and how to follow a new work process?

- Do the sample formats range from print-based help to web-based help to video tutorials? Do the samples include newer content forms such as microcopy (application tooltips or UI copy), chatbot scripts, or social media messaging?

- Do the samples cross genres, such as instruction manuals, technical reports, reference materials, project documentation, or training modules?

Grammar and Mechanics

- Does the content follow appropriate conventions in punctuation, word usage, and sentence structure?

- Are there obvious errors in grammar or word choice that would cause you to question the writer’s attention to detail?

Having a clear set of criteria for evaluating writing samples supports an equitable process to get qualified technical communicators on board. Download this template from the TechWhirl Template Library.

It is important to note that no single unfavorable answer to the questions above automatically disqualified an applicant. Rather, what mattered was the cumulative number of favorable and unfavorable answers, as well as how consistent those answers were across evaluators.

We tended to give more weight to some criteria than to others. For example, we placed greater emphasis on logical organization and clear explanations of complex ideas than on perfect grammar (although these qualities usually went hand in hand). The weight you assign to each dimension will likely vary depending on the needs of your organization and the position you’re seeking to fill.
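If you want to make this kind of tallying and weighting explicit, a spreadsheet or a few lines of code will do. The Python sketch below is one hypothetical way to do it, not a reproduction of our own process: the category weights are invented for illustration, each evaluator records simply whether they judged a category favorable (regardless of how the underlying question is phrased), and the spread between evaluators’ scores serves as a rough consistency check.

```python
# Hypothetical category weights; the values are illustrative assumptions.
# Adjust them to your organization's priorities.
WEIGHTS = {
    "design": 1.0,
    "organization": 2.0,
    "style": 1.5,
    "technical_complexity": 2.0,
    "diversity": 1.0,
    "grammar": 0.5,
}


def weighted_score(ratings: dict[str, bool]) -> float:
    """Return the weighted share of categories one evaluator judged favorable (0.0 to 1.0).

    True means "favorable" even when the question is phrased negatively,
    such as "Are there obvious errors in grammar or word choice?"
    """
    total = sum(WEIGHTS[category] for category in ratings)
    favorable = sum(WEIGHTS[category] for category, ok in ratings.items() if ok)
    return favorable / total if total else 0.0


def summarize(evaluations: list[dict[str, bool]]) -> tuple[float, float]:
    """Return the mean score across evaluators and the spread (max minus min).

    Assumes at least one evaluation. A large spread suggests the evaluators
    disagree and should discuss the sample before deciding.
    """
    scores = [weighted_score(e) for e in evaluations]
    return sum(scores) / len(scores), max(scores) - min(scores)


# Two evaluators reviewing the same (hypothetical) applicant.
evaluator_a = {c: True for c in WEIGHTS} | {"grammar": False}
evaluator_b = {c: True for c in WEIGHTS} | {"design": False, "grammar": False}

mean, spread = summarize([evaluator_a, evaluator_b])
print(f"mean={mean:.3f} spread={spread:.3f}")  # mean=0.875 spread=0.125
```

Whether a mean of 0.875 is good enough, and how large a spread should prompt a discussion, remain judgment calls the sketch leaves to your team.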

We did notice that certain combinations of factors worked in an applicant’s favor. For example, one applicant submitted a technical report on the state of oil fracking in Texas. The document was well designed, and the applicant had written it entirely from scratch, drawing on numerous interviews with engineers. This applicant outshone others whose samples were well written but whose subject matter was simpler and whose role in producing the sample was far more limited.

We found that writing competence in both print and web media (rather than only one or the other) was surprisingly rare, and few applicants presented high-quality online samples. While we did not automatically hire applicants who submitted web-based documents, we generally gave them more attention. We also tended to look more favorably on applicants who included a synopsis describing the context of the sample, because this saved us time during interviews.

Strategy 2: Follow-Up Questions for Writing Samples

Even if an applicant met our criteria for high-quality samples, we still lacked a complete picture of the applicant’s contribution. We usually couldn’t tell whether the applicant had written the sample from scratch, started from a template, or edited content drafted by someone else. So in addition to our evaluation criteria, we built a set of standard follow-up questions to ask during the interview.

- Why did you select that particular sample to share with us?

- Were you the only author or were there other contributors to the document?

- Did you create the sample from scratch or did you have a template?

- How much control did you have over the design and organization?

- What tool(s) did you use to develop the content?

- How did you verify the accuracy of the content and obtain approval for its publication?

- What were some of the challenges you faced in creating the document and how did you overcome them?

- If you had more time and resources, what would you do differently?

The answers to these questions were telling. Sometimes the applicants gave vague or evasive responses, or admitted that they played a limited role. As we gained experience asking these questions consistently, we found that the most competitive applicants could do one or more of the following:

- Explain how the sample was relevant to the job and speak in detail to each element, even if they had played an editing role rather than an authoring role

- Tell a before-and-after story about the challenges or problems they faced and how they overcame them

- Justify particular writing and design decisions, and speak to the outcomes they achieved with the sample

Conclusion: Customize Your Evaluation Strategies

Of course, no single evaluation strategy is perfect for every organization that hires technical communicators. A considerable degree of subjectivity is built into evaluating writing samples, and the particular needs of your organization may lead you to place greater emphasis on some criteria and follow-up questions than on others. You should therefore customize your evaluation strategies, decide which criteria and questions deserve more or less weight, and try to anticipate the kinds of answers you would consider good, bad, or intermediate. To help you get started, we’ve included a downloadable sample evaluation template that you can use to modify the criteria, add your own weightings, and revise the follow-up questions to fit your needs. Whether you use this template or design your own approach, make your criteria and questions explicit, and apply them as consistently as possible.

Download Richard’s Technical Writing Sample Evaluation Criteria Template from the TechWhirl Template Library.